Data ingestion in Google Cloud Dataflow to Big Query without the headaches, part 2

If you haven’t already please read the part 1 of this series, otherwise the following will make much less sense.

I have added some additional features to the pipeline. It now has a configurable amount of time for retry attempts, records the datetime when the data was received (processed_timestamp) and dumps data which have passed the retry time into a “bad data table”. Lets explore what the new DAG looks like.

As you can see there is a new step which splits out data, into two groups. Data that will be retried and data that will be dumped into the “bad data table”. The bad data table contains the original data, bad_data, the target table in Big Query, target_dest, the specific error that piece of data failed with, error, and the time which the data was inserted into the bad data table, insert_time.

The retry mechanism, works by setting an integer for the number of minutes a message can be retried. Whenever a message is retried the retry number stored in the message is updated. This updated number is equal to the minute different between the originally received time, processed_timestamp, and the current time divided by the size, in minutes, of the processed time window. This effectively give us the number of times we have attempted to migrate the schema in the target table for Big Query.

A caveat to this is that the message may have gone around the retry step more than once, however because it has only been attempted to migrate a fixed number of times due to the time processed time window. It does accurately represent the amount of times the target table schema has been attempted to be changed.

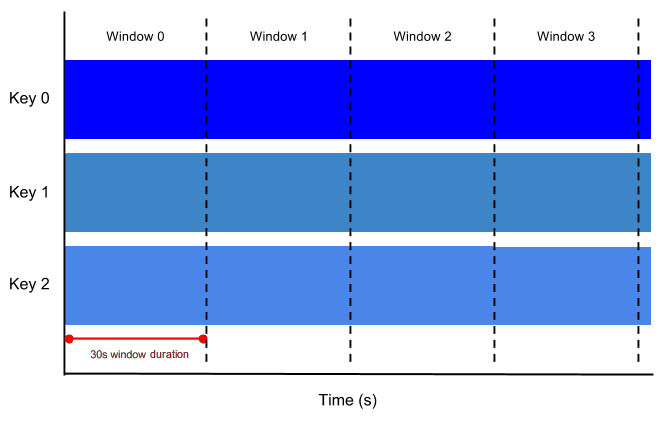

The processed time window for schema changes works by, creating a key, for the target table, and a value which is the combined new schema, which is the merger of the new incoming schema and the current target tables schema. This happens on a configurable time increment. This avoids updating Big Query for each piece of data with a different schema, but rather a combined window of changes. This combined window prevents a situation at when there a large number of recent JSON objects all with a new schema for target table attempting to update the target table. As things happen in parallel, many many updates could be triggered. Hence the combining, to achieve this in dataflow a Fixed Time window is used, read about them in Beam’s official documentation, or see below for a simple example.

Given the grouping of schema, changes the retry number is effectively the number of times the pipeline has tried to update the schema of the target table. The JSON object which is triggering this change could have gone round the pipeline many times or not many at all. If the pipeline is dealing with a large amount amount of data please set the window size to smaller value (1-5). With a smaller amount of data obviously you can have a larger window size, however setting a larger windows will increase the amount of time it takes to update the target table. If you are not sure stick with the default of 3.